Abraham Wald and his work on WWII bomber damage is a really cool story highlighting why we need to always challenge the way we look at data and consider what our data is really telling us, and what data we may be missing.

jim o'neill | austin texas


Plotting all your data can be hard. Some think it’s pointless. Some think it’s a waste of time. Some think generic dashboards are better. Some think logging is more precise.

Nothing can replace a well-designed scatter plot as a critical diagnostic tool.
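A classic illustration of why summary statistics are no substitute for a plot is Anscombe’s quartet (my addition, not part of the original post): four small data sets whose means, variances and correlations nearly match, yet whose scatter plots look completely different. The sketch below only computes the summaries; `pearson_r` and `summarize` are hypothetical helper names.

```python
from statistics import mean, variance


def pearson_r(xs, ys):
    """Pearson correlation coefficient, computed directly from its definition."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


X = [10, 8, 13, 9, 11, 14, 6, 4, 12, 7, 5]

# Anscombe's quartet (1973): four x/y sets with near-identical summary statistics.
QUARTET = {
    "I": (X, [8.04, 6.95, 7.58, 8.81, 8.33, 9.96, 7.24, 4.26, 10.84, 4.82, 5.68]),
    "II": (X, [9.14, 8.14, 8.74, 8.77, 9.26, 8.10, 6.13, 3.10, 9.13, 7.26, 4.74]),
    "III": (X, [7.46, 6.77, 12.74, 7.11, 7.81, 8.84, 6.08, 5.39, 8.15, 6.42, 5.73]),
    "IV": ([8, 8, 8, 8, 8, 8, 8, 19, 8, 8, 8],
           [6.58, 5.76, 7.71, 8.84, 8.47, 7.04, 5.25, 12.50, 5.56, 7.91, 6.89]),
}


def summarize(xs, ys):
    """The numbers a dashboard might show, rounded the way a report would."""
    return {
        "mean_x": mean(xs),
        "var_x": variance(xs),
        "mean_y": round(mean(ys), 2),
        "r": round(pearson_r(xs, ys), 2),
    }


if __name__ == "__main__":
    for name, (xs, ys) in QUARTET.items():
        print(name, summarize(xs, ys))
```

All four rows print essentially the same summary; only a scatter plot reveals that one set is linear, one is curved, one has an outlier and one is driven by a single point.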

If you are into Entity Analytics (like I am), then a cursory understanding of Zipf’s Law should be a tool in your bag. It’s a really cool mathematical relationship that governs most of the distributions you will encounter in our line of work, mainly natural data sets in which an item’s frequency is roughly inversely proportional to its frequency rank.
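A minimal sketch of checking data against Zipf’s Law, assuming you simply have a list of observed items (names, tokens, entity keys): rank the items by frequency, then fit a line to log(frequency) against log(rank). Under the classic form of the law, frequency ≈ C / rank, so the fitted exponent should come out near 1. The helper names are hypothetical, and a log-log least-squares fit is only a quick diagnostic, not a rigorous estimator.

```python
import math
from collections import Counter


def rank_frequency(items):
    """Return (rank, frequency) pairs, most frequent item first (rank 1)."""
    freqs = sorted(Counter(items).values(), reverse=True)
    return list(enumerate(freqs, start=1))


def zipf_exponent(pairs):
    """Least-squares slope of log(frequency) vs log(rank), sign-flipped.

    If frequency ~ C / rank**s, the log-log slope is -s, so this returns s."""
    xs = [math.log(r) for r, _ in pairs]
    ys = [math.log(f) for _, f in pairs]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return -num / den


if __name__ == "__main__":
    # Hypothetical, perfectly Zipfian toy data: the item at rank r appears 60 // r times.
    items = []
    for rank, name in enumerate("abcdef", start=1):
        items += [name] * (60 // rank)
    pairs = rank_frequency(items)
    print(pairs)
    print(round(zipf_exponent(pairs), 3))
```

Real entity data will be noisier, especially in the long tail, so expect an exponent near 1 rather than exactly 1.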

A Fermi estimate, or Fermi problem, is named after physicist Enrico Fermi, who developed the method while estimating the yields of atomic bomb blasts. It gives scientists and engineers a way to come up with a rapid estimate of the answer to a problem where a precise measurement is not possible.
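To make the idea concrete, here is a sketch of the well-known “how many piano tuners work in Chicago?” Fermi problem. Every number below is a rough assumption, not data: the technique is to break the question into factors you can guess within an order of magnitude, multiply them out, and report the power of ten. The function and dictionary names are hypothetical.

```python
import math

# Every factor is a rough, hypothetical guess; that is the point of a Fermi estimate.
SCALE_UP = {
    "population": 3_000_000,
    "share_of_households_with_piano": 1 / 20,
    "tunings_per_piano_per_year": 1,
}
SCALE_DOWN = {
    "people_per_household": 2,
    "tunings_per_tuner_per_day": 4,
    "working_days_per_year": 250,
}


def fermi_estimate(scale_up, scale_down):
    """Multiply the factors that grow the answer and divide by those that shrink it.

    Returns the point estimate and its order of magnitude (nearest power of ten)."""
    value = math.prod(scale_up.values()) / math.prod(scale_down.values())
    return value, round(math.log10(value))


if __name__ == "__main__":
    value, magnitude = fermi_estimate(SCALE_UP, SCALE_DOWN)
    print(f"about {value:.0f} tuners, i.e. on the order of 10^{magnitude}")
```

With these guesses the chain gives 75,000 tunings a year against roughly 1,000 tunings per tuner, so about 75 tuners, or “on the order of 100.” Overestimates in one factor tend to cancel underestimates in another, which is why the order of magnitude is usually trustworthy even when the individual guesses are not.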

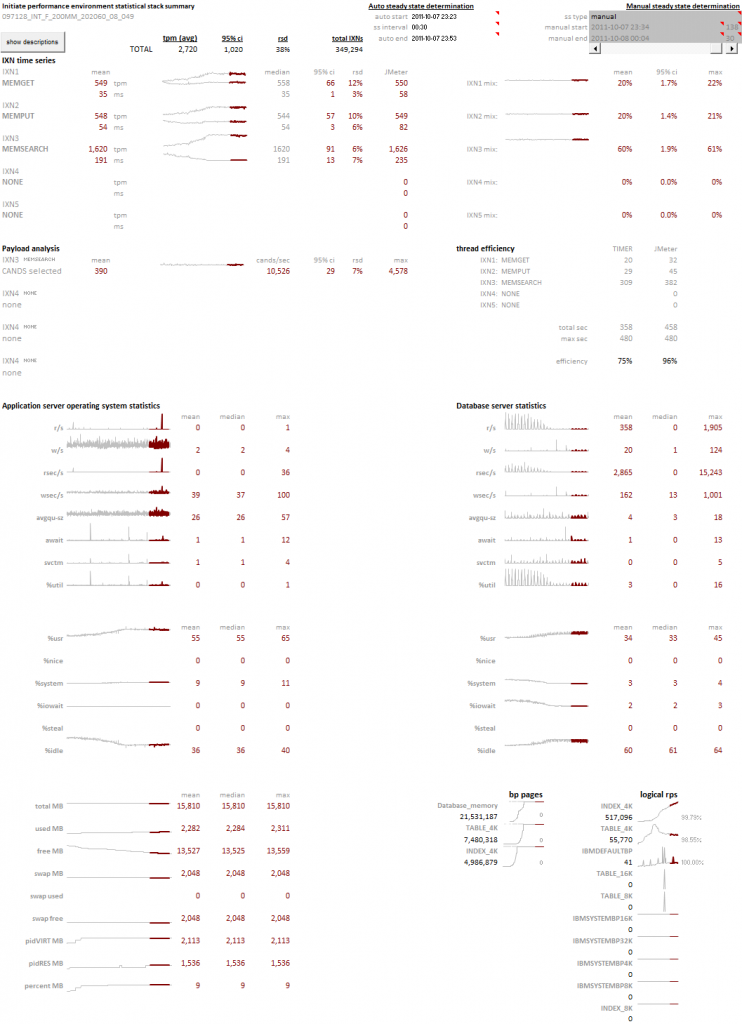

Yes, Ambari Metrics is a horrid, terrible information presentation platform that needs a major overhaul. I am not sure who it was designed for, but it was not designed for data scientists interested in cluster performance. I really appreciate the work Hortonworks has invested in making Hadoop more approachable as a platform, but I was really disappointed by the gutting of the Ganglia/Nagios capabilities, because their replacement, Ambari Metrics, simply could not provide the diagnostic tools and layered access to data that Ganglia did.

Here’s the monitoring console I’m unhappy with:


I am a dart frog addict…er…hobbyist. You can read more about my involvement in the hobby here, but one aspect of the hobby that intersects with my analytics background is weather data. I try to locate weather stations near the regions in South America where the frogs I keep were originally collected. This has led to some interesting visualizations, including this one, regional weather data for Sao Lourenco:

I’m a big proponent of Edward Tufte and his information design principles. I’ve included a few key concepts in this post from Tufte’s writings that I have found useful in developing my own visualization techniques and strategies.

Edward Tufte has written extensively on the use of small multiples and sparklines. Both concepts use many small plots built on a consistent pattern, which lets you quickly survey large amounts of data for abnormal patterns. They rely on the eye’s ability to parse large numbers of micro plots and quickly spot an abnormal pattern, an innate ability that all people have. We can leverage this ability by creating visualizations that present consistent patterns to the viewer and subtly illustrate abnormalities that anyone can observe.
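As a rough, text-only approximation of the idea (Tufte’s sparklines are proper graphics, not Unicode characters), the sketch below renders one word-sized sparkline per series using block characters. The key small-multiples choice is that every row shares the same scale, so a series that breaks the common pattern stands out immediately. The helper names and the node data in the usage example are hypothetical.

```python
BLOCKS = "▁▂▃▄▅▆▇█"


def sparkline(values, lo, hi):
    """Map each value onto one of eight block characters using a fixed lo..hi scale."""
    span = (hi - lo) or 1  # avoid dividing by zero when every value is identical
    steps = len(BLOCKS) - 1
    chars = []
    for v in values:
        idx = round((v - lo) / span * steps)
        chars.append(BLOCKS[max(0, min(idx, steps))])
    return "".join(chars)


def small_multiples(series):
    """One sparkline per named series, all drawn on a shared scale."""
    lo = min(min(vals) for vals in series.values())
    hi = max(max(vals) for vals in series.values())
    width = max(len(name) for name in series)
    return "\n".join(
        f"{name:<{width}} {sparkline(vals, lo, hi)}" for name, vals in series.items()
    )


if __name__ == "__main__":
    # Hypothetical hourly load for four nodes; node-03 carries the anomaly.
    print(small_multiples({
        "node-01": [3, 4, 3, 5, 4, 3, 4, 3],
        "node-02": [4, 3, 4, 4, 5, 4, 3, 4],
        "node-03": [3, 4, 3, 9, 9, 3, 4, 3],
        "node-04": [4, 4, 3, 4, 3, 5, 4, 4],
    }))
```

Scaling each row independently would stretch every series to fill its own height and hide the one that is genuinely different, which is why the shared scale matters more than the drawing itself.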


Time for a new twist on an old concept…the Gantt chart. It’s tried and true, and we’re used to seeing it in old waterfall planning models. However, we can apply it to the project chart for a large-scale POC of an MDM application housing 250 million records on a new clustered database. The schedule was extremely aggressive: the original target of three weeks stretched to five weeks due to delays in database deployments and JDBC/ODBC connectivity issues.

Can you build a visualization that conveys the complexity of a project involving a dozen technical people, hundreds of tests, and distinct goals?

Hey, I’m a data guy – you know what the answer is going to be…
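For reference, the plain Gantt chart that serves as the starting point can be sketched in a few lines of text output. This is a baseline sketch, not the visualization from the post: the task names and day ranges are hypothetical placeholders, not the actual POC schedule, and `text_gantt` is a made-up helper.

```python
def text_gantt(tasks, total_days):
    """Render (name, start_day, end_day) tasks as a text Gantt chart.

    Days are zero-based and end_day is exclusive, so a task (name, 0, 5) fills days 0-4."""
    width = max(len(name) for name, _, _ in tasks)
    rows = []
    for name, start, end in tasks:
        if not 0 <= start <= end <= total_days:
            raise ValueError(f"task {name!r} falls outside 0..{total_days}")
        bar = "." * start + "#" * (end - start) + "." * (total_days - end)
        rows.append(f"{name:<{width}} |{bar}|")
    return "\n".join(rows)


if __name__ == "__main__":
    # Hypothetical POC plan in working days (5 weeks = 25 days); not the real project data.
    print(text_gantt([
        ("db deployment", 0, 8),
        ("jdbc/odbc connectivity", 5, 13),
        ("data load", 10, 18),
        ("test runs", 12, 25),
    ], 25))
```

A chart like this shows only when each task ran; it says nothing about who worked on it, how many tests ran, or which goals they served, which is exactly the information a richer project visualization has to add.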

© 2025 tertiary analytics
